Web-Scraping

What part of a computer does a spider use?

The webcam.

Intro and Foundations

Ever looked at huge data reviews and visualizations wondered where the data comes from? Chances are, the creators of these often use a technique called web-scraping: which allows them to automatically parse through data on the web in an extremely efficient manner. Lets apply this web-scraping to create a recommender system for movies and tv shows based off a user’s favorite movie!

🎥1

Here’s a link to my project repository.

Inside my scraper file, we will be utilizing something called a “spider” which will be our primary tool to scrape IMDB. Our spider can be split up into three main parts: the parse method, the parse_full_credits method, and the parse_actor_page method.

First, we must initialize our spider! To do so, we will be using the scrapy framework.

To create a file location for our spider, we are going to be using the terminal.

In our terminal we input the following code: scrapy startproject IMDB_scraper (scrapy must be loaded in the environment already) Afterwards, we can navigate to the IMDB_scaper file we have created, and go into the spiders directory to create our imdb_spider.py file.

To initialize our file, we will do the following…

import scrapy

class ImdbSpider(scrapy.Spider):

name = 'imdb_spider'

start_urls = ['https://www.imdb.com/title/tt6710474/']

Now that we have created our project and initialized our spider, lets go over our methods together!

Parse Method

def parse(self, response):

'''

This method will start on the start_url, and navigate to find the full_credits url on the page.

It will pass that url to the parse_full_credits method and will not output data.

'''

#contains all the html of related items to our full_credits page

list_of_links = response.css("li.ipc-metadata-list__item a.ipc-metadata-list-item__label")

titles = list_of_links.css("::text").getall() #list containing all text in our list_of_links

hrefs = list_of_links.css("::attr(href)").getall() #list containing all the hrefs in our list_of_links

#BECAUSE titles and hrefs original from the same response.css, their indexing is the same. We take advantage

#of this fact to parse out the href we desire in order to advance to the full_credits imdb page for our movie

for i in range(len(titles)):

if titles[i] == 'All cast & crew':

next_page = hrefs[i]

full_url = response.urljoin(next_page)

yield scrapy.Request(full_url, callback = self.parse_full_credits) #call parse_full_credits method with new url

Our parse method is what the spider will initially execute first. For our example today, we will be using the following movie Everything Everywhere All at Once to see how our spider operates. Our parse method will make our spider find the reference link to the full cast page from our base movie page, and then send that link to the parse_full_credits method.

Parse_Full_Credits Method

def parse_full_credits(self, response):

'''

This method will start on the full_credits url found from the parse method.

It will navigate the page to find the links of all actors/actresses in the cast.

It will pass those urls to the parse_actor_page method and will not output data.

'''

#create list of actor links within cast/crew page

actor_list = [a.attrib["href"] for a in response.css("td.primary_photo a")]

#for every actor in the actor_list, lets call the parse_actor_page method

for actors in actor_list:

actor_link = str(response.urljoin(actors))

yield scrapy.Request(actor_link, callback = self.parse_actor_page)

Now that our spider is in the cast/crew page, we are going to extract the links for every single actor/actress that took a part in the main cast for our movie. Afterwards, our spider will send each link to our parse_actor_page method.

Parse_Actor_Page Method

def parse_actor_page(self, response):

'''

This method will start on an actor/actress's page.

It will navigate the page to find the movies/shows that they have participated in their whole career.

It will output a dictionary containing the actor's name and the name of a show, for every single show on their webpage.

'''

#actor name

actor = response.css("span.itemprop::text").extract()[0]

#obtains all the names of the movies/shows the actor was in

movie_names = response.css("div.filmo-row b a::text").getall()

#for each movie, yield the following dictionary

for movies in movie_names:

yield {"actor" : actor, "movie_or_TV_name" : movies}

In this method, our spider is scraping though the links of every single actor’s page who participated in our movie of choice. It then scrapes all the names of the movies/shows the actor was in, and yields a dictionary containing the actor’s name and the name of the media the actor was in for every single movie/show on the actors list.

🎥2

Now that we have finished writing our spider. Lets actually use it!

In the terminal we can call scrapy crawl imdb_spider -o movies.csv, which will write out our ouput into the movies.csv file. You can see an example of what the movies.csv file should look like here.

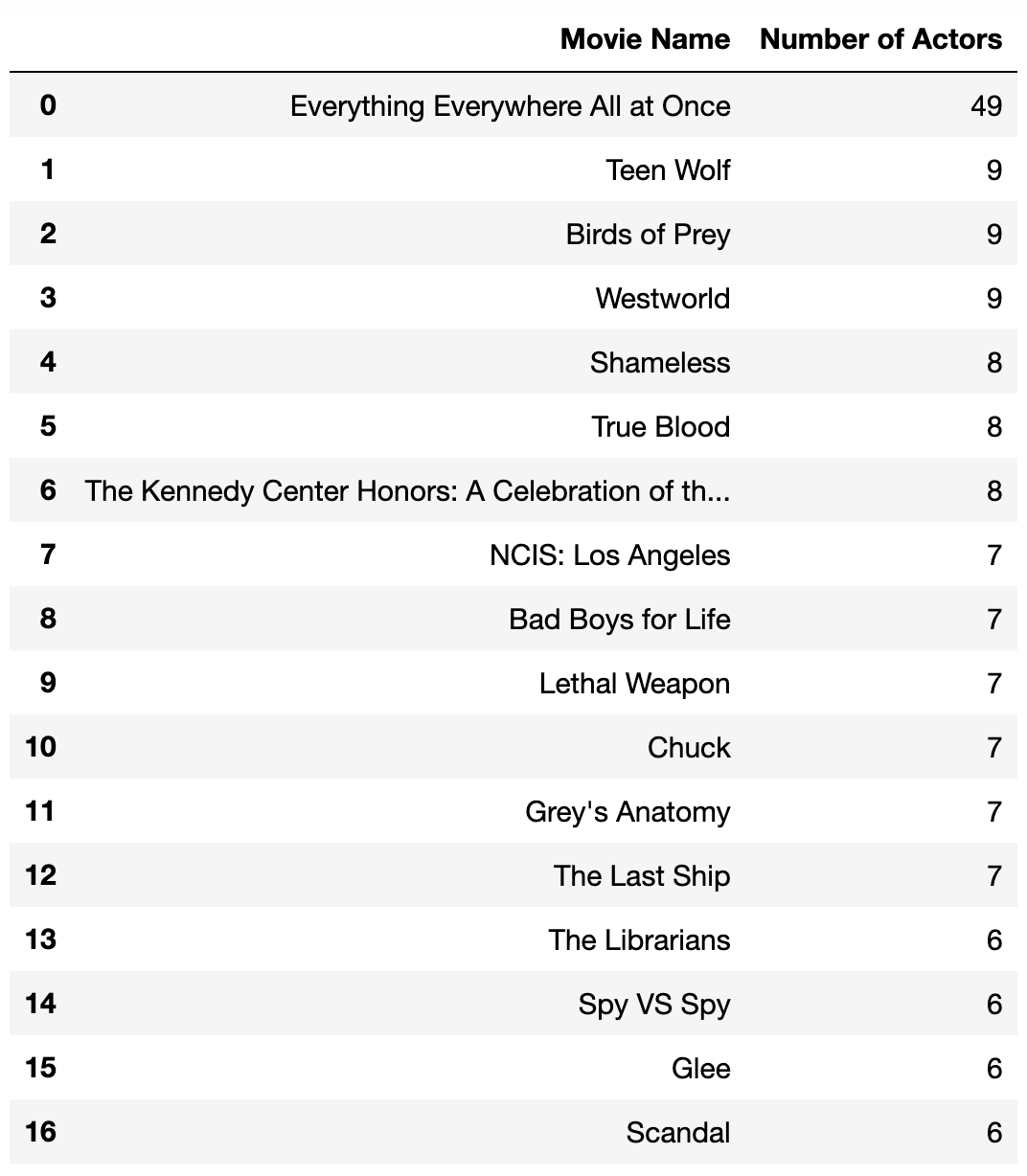

Below is a more order version of our csv file, now ordered by most shared actors per movie name.

We can also look at our data in a more visual way!

As we can see from the table and graph, obviously the most common show between the actors is the core movie we chose to scrape from.

Hopefully this blog was able to inspire you to embark on some webscraping journies yourself! Thanks for reading!